Automate Deployment of React apps to AWS S3 using a CD Pipeline

The Problem

Recently, I have been working on a project with a static site developed using React as its frontend. The rest of the project uses AWS services heavily, so I went with S3 for hosting the static React frontend, with a CloudFront distribution in front of it as a CDN. Each time I wanted to deploy, I had to manually upload all the build files to the S3 bucket and also take care of versioning, since CloudFront caches your site and whenever you push something new, you have to invalidate the old cache. Doing this every time I wanted to push was a headache, so I set up a simple CI/CD pipeline that automates all of it.

Hold on, what’s CI/CD?

CI/CD (Continuous Integration / Continuous Delivery) automates the path from a code change to a deployment. A CI/CD pipeline can build, test and deploy the code automatically each time we “do something” (for example, committing to the repo or opening a pull request). CI is the process of building our code and running any unit tests on it, which catches integration errors introduced by new changes early. In the CD phase, the code is deployed after running any additional tests.

In my case, the project was in its initial stages and there were no tests involved, so all I had to do was automate the build and deploy parts. This post documents how I did it.

The Solution

First off, let’s see what our requirements are. What do we need to automate? Well, when a commit is pushed to a repository, we expect our pipeline to –

- Build the React code using the build script defined in package.json

- Upload these build files to our S3 bucket (it should replace the previous files)

- Make our CloudFront distribution aware of these changes, so that it starts serving the new files instead of the old ones.

Alright, let’s get into the specifics now. We are going to use AWS CodePipeline, a continuous delivery service, to achieve these goals. It triggers automatically when you update the source repo and passes the changes through three stages:

- Source Stage – pulls the new changes from our repo.

- Build Stage – installs dependencies and builds our code. If you want to run any automated tests, you can do them here. We will be using CodeBuild for building our code.

- Deploy Stage – deploys the changes to our production environment.

Instead of AWS CodeCommit (AWS’s own Git hosting service), we will be using GitHub as the source for our code.

Also, I already had an S3 bucket and a linked CloudFront distribution ready. If you don’t, follow this link for instructions on setting them up.

Note: Make sure you create the CodePipeline in the same region as your S3 bucket. I made the mistake of creating them in two separate regions, and then had to delete the pipeline and create it again!

Step 1: Make sure your latest code is pushed to your GitHub repo.

Step 2: Create a new CodeBuild project.

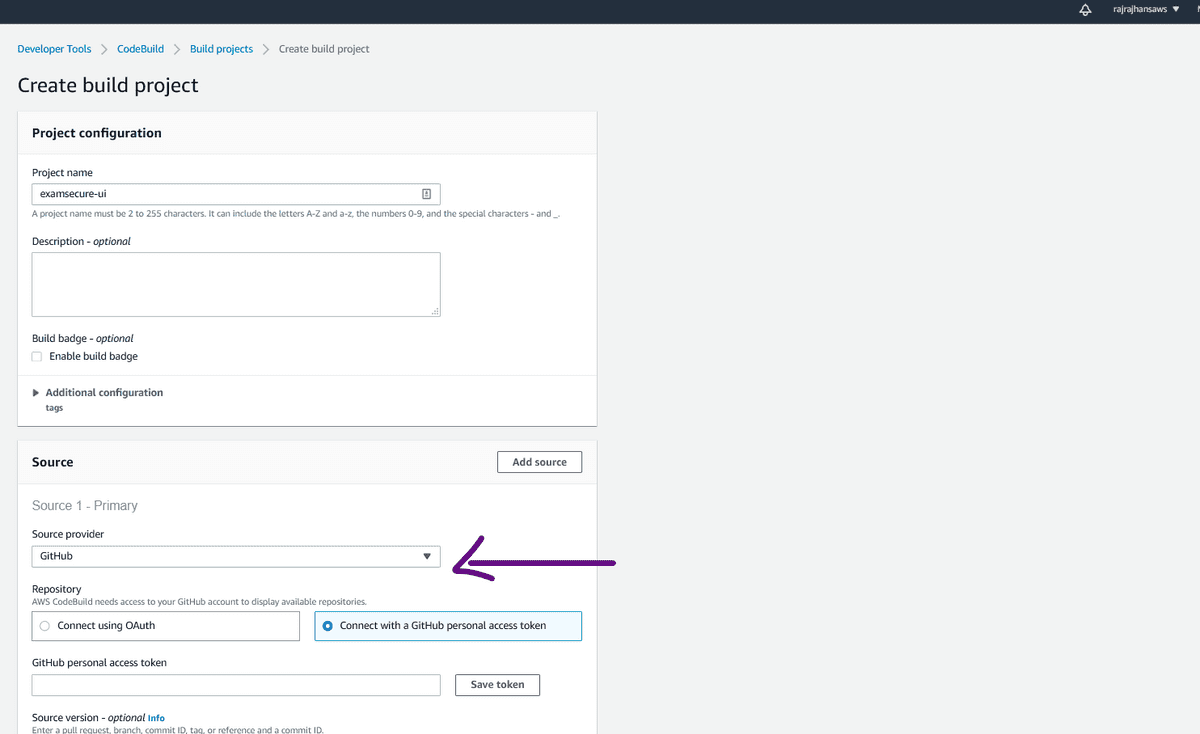

Select GitHub as the “Source”. You can connect using OAuth or with a GitHub personal access token. After you connect, you can enter the repository URL.

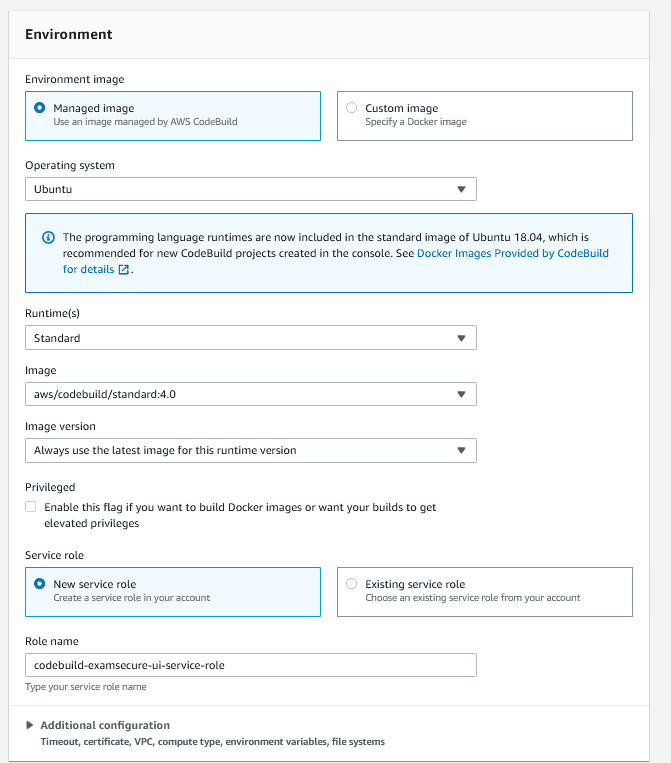

Once the repository is connected, we can specify the Environment settings. The defaults suggested by AWS should work without any issues. Following is what I selected –

Next, you have to specify the Build Specifications. This is where the whole magic happens. When CodeBuild spins up a build environment for us with the specified settings, it has to “do something” (for example, executing npm run build) to actually build our project. That “doing something” part is specified in the buildspec.

By default, CodeBuild looks for a buildspec.yml file in the root of your project and uses it as the build specification. Let’s walk through the buildspec used in our case.

The specification includes four phases –

- install
  - Set up the specified environment

- pre_build
  - Commands to run before the build
  - In our case, this is npm install, which installs all our dependencies

- build
  - The actual build commands
  - In our case, this is npm run build

- post_build
  - Commands to run after the build, such as cleanup tasks
  - In our case, we push the resulting build files to S3 and tell our CloudFront distribution to invalidate index.html and service-worker.js, which makes sure that CloudFront serves our latest files
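Put together, the four phases map onto a buildspec skeleton like the one below. This is a sketch, not the exact file used in this post: that file skips install, so the install phase with runtime-versions is an addition that assumes a recent CodeBuild standard image, and nodejs: 18 is an assumed version you should swap for one your image supports.

```yaml
version: 0.2

phases:
  install:
    # Assumption: Node.js 18 is available on the chosen build image
    runtime-versions:
      nodejs: 18
  pre_build:
    commands:
      # install the dependencies listed in package.json
      - npm install
  build:
    commands:
      # run the "build" script from package.json (writes ./build in this project)
      - npm run build
  post_build:
    commands:
      # placeholder: the S3 upload and CloudFront invalidation go here
      - echo Build completed on `date`
```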

Following is the buildspec.yml file that I used. The comments explain what each individual command does.

```yaml
# 0.2 is the recommended buildspec version (0.1 runs each command in a separate shell)
version: 0.2

phases:
  pre_build:
    commands:
      - echo Installing source NPM dependencies...
      - npm install
  build:
    commands:
      - echo Build started on `date`
      - npm run build
  post_build:
    commands:
      # copy the contents of /build to S3
      # (--acl public-read requires ACLs to be enabled on the bucket; buckets with
      # Object Ownership set to "Bucket owner enforced" reject it)
      - aws s3 cp --recursive --acl public-read ./build s3://edi-ty52-webuibucket-u9l4ezvzdnvt/
      # set the cache-control headers for service-worker.js to prevent browser caching
      - >
        aws s3 cp --acl public-read
        --cache-control="max-age=0, no-cache, no-store, must-revalidate"
        ./build/service-worker.js s3://edi-ty52-webuibucket-u9l4ezvzdnvt/
      # set the cache-control headers for index.html to prevent browser caching
      - >
        aws s3 cp --acl public-read
        --cache-control="max-age=0, no-cache, no-store, must-revalidate"
        ./build/index.html s3://edi-ty52-webuibucket-u9l4ezvzdnvt/
      # invalidate the CloudFront cache for index.html and service-worker.js
      # to force CloudFront to update its edge locations with the new versions
      - >
        aws cloudfront create-invalidation --distribution-id E3552Z2O0OJKV0
        --paths /index.html /service-worker.js

artifacts:
  files:
    - '**/*'
  base-directory: build
```

After this, the last step is to select your S3 bucket as the “Artifact”.
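Before creating the project, one caveat about the first upload command: aws s3 cp --recursive overwrites files that share a name, but it never deletes objects that are no longer part of the build, so old hashed bundles accumulate in the bucket. If you want the bucket to mirror ./build exactly (the “replace the previous files” requirement), one possible alternative is aws s3 sync with --delete, sketched below. This is a suggested swap rather than what I originally ran, and it also needs s3:ListBucket and s3:DeleteObject permissions.

```yaml
phases:
  post_build:
    commands:
      # Mirror ./build into the bucket: upload new or changed files and delete
      # objects that no longer exist locally (such as old hashed bundles)
      - aws s3 sync ./build s3://edi-ty52-webuibucket-u9l4ezvzdnvt/ --delete --acl public-read
      # ...then re-upload index.html and service-worker.js with no-cache headers
      # and create the CloudFront invalidation, as in the buildspec above
```

The trade-off: a visitor who still has an old index.html open could request a chunk that has just been deleted, whereas with cp the bucket only ever grows.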

After these steps, click on “Create Build Project”.

Great! Now our build project is ready. Let’s create a pipeline in CodePipeline that uses the build project we just created.

Step 1 – Create a new pipeline by going to CodePipeline > New Pipeline

Step 2 – Choose basic pipeline settings such as the name and description.

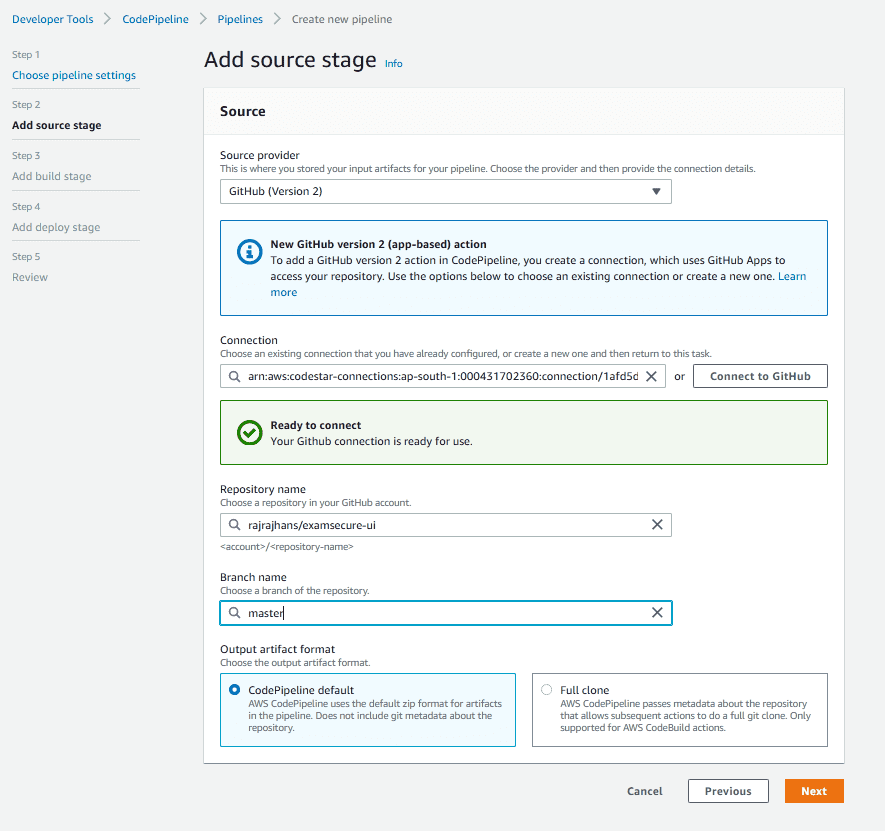

Step 3 – Add the Source Stage. Here, we select our GitHub repo. Here is a screenshot of this step completed:

Step 4 – Add Build Stage

- In this stage, select the CodeBuild project we created earlier. You can also specify any environment variables your project might need.

- You can skip the deploy stage, since pushing files to S3 and invalidating the CloudFront cache already happen in the post_build phase of our CodeBuild project.
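Because the build stage can pass environment variables, one option is to keep the bucket name and distribution ID out of the buildspec instead of hard-coding them. A minimal sketch, assuming you define two variables with the hypothetical names S3_BUCKET and DISTRIBUTION_ID in the build stage; CodeBuild exposes them to the build commands as ordinary shell environment variables.

```yaml
version: 0.2

phases:
  post_build:
    commands:
      # S3_BUCKET and DISTRIBUTION_ID are hypothetical variables assumed to be
      # set in the pipeline's build stage (or in the CodeBuild project settings)
      - aws s3 cp --recursive --acl public-read ./build "s3://${S3_BUCKET}/"
      - >
        aws cloudfront create-invalidation
        --distribution-id "${DISTRIBUTION_ID}"
        --paths /index.html /service-worker.js
```

With the names outside the file, the same buildspec could deploy to a different bucket and distribution just by changing the variables.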

Once the pipeline is created, it gets triggered and runs for the first time. After it has run, you should see something like this –

If you face any errors at this stage, ensure two things –

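- Your app builds without any errors on your own machine

- The “CodePipeline” and “CodeBuild” service roles have been given the permissions they need to access your S3 bucket. The CodeBuild role runs the uploads and the invalidation, so it needs at least s3:PutObject and s3:PutObjectAcl (because of --acl public-read) on your bucket, plus cloudfront:CreateInvalidation. To grant these, go to AWS Management Console Home > IAM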

That’s it. We are done! From now on, whenever you push to your repo, the pipeline we just created gets triggered, builds the project using our CodeBuild project, and deploys it to S3 and CloudFront. Pretty cool, isn’t it!

Hope this was helpful, thanks for reading!
