
This blog post contains the notes I made while trying to understand how digital video actually works and what goes on behind the scenes. It is mostly a broad overview of the topic, and I plan to dive deeper into the parts that interest me in upcoming posts. This one should help you understand the basic concepts of digital media.

What is digital media, really?

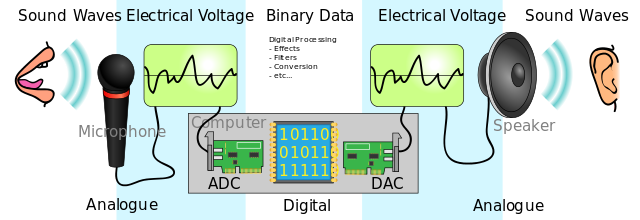

Audio → what you listen to

Video → what you see

Digital media (or a “video” in common terms) is a combination of “video” and “audio”. The video part is an ordered sequence of digital pictures (or “frames”) captured at a given frequency (say, 30 frames per second), and the audio part is a digital audio track. When the content is played, the device displays the frames in sequence, each for about 33 ms (1000 ms / 30 fps), and we perceive the result as motion.
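A minimal sketch of the playback timing described above, assuming a constant frame rate (real players also synchronize video against the audio clock). The function names are hypothetical, chosen for illustration.

```python
def frame_interval_ms(fps: float) -> float:
    """How long each frame stays on screen, in milliseconds."""
    return 1000.0 / fps


def presentation_times_ms(frame_count: int, fps: float) -> list[float]:
    """Timestamp (ms from the start) at which each frame should be shown."""
    interval = frame_interval_ms(fps)
    return [i * interval for i in range(frame_count)]


if __name__ == "__main__":
    print(f"{frame_interval_ms(30):.2f} ms per frame at 30 fps")  # 33.33 ms per frame at 30 fps
    print([round(t, 1) for t in presentation_times_ms(4, 30)])    # [0.0, 33.3, 66.7, 100.0]
```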

The problem: raw video & audio are too big in size!

Consider a media clip that is 5 seconds long.

- Raw audio:

- Five seconds of raw 5.1 audio (6 channels, 48 kHz sample rate, 16 bits = 2 bytes per sample) takes up around 2.9 MB:

6 channels * 48000 Hz * 5 s * 2 bytes = 2,880,000 bytes ≈ 2.9 MB

- Raw video:

- Consider 1080p resolution (1920 x 1080), 24 bits per pixel (8 bits each for R, G, and B), and 60 fps (frames per second):

1920 * 1080 * 3 bytes * 5 s * 60 fps = 1,866,240,000 bytes ≈ 1.7 GiB (about 1.9 GB)
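The arithmetic above can be reproduced with a small sketch. The helper names are hypothetical; the last function converts a size into the bitrate needed to stream it in real time, which shows why raw media is impractical to distribute.

```python
def raw_audio_bytes(channels: int, sample_rate_hz: int, bytes_per_sample: int, seconds: float) -> int:
    # Uncompressed PCM: every channel stores one sample per tick of the sample clock.
    return int(channels * sample_rate_hz * bytes_per_sample * seconds)


def raw_video_bytes(width: int, height: int, bytes_per_pixel: int, fps: int, seconds: float) -> int:
    # Uncompressed frames: every pixel of every frame is stored in full.
    return int(width * height * bytes_per_pixel * fps * seconds)


def bitrate_bps(size_bytes: int, seconds: float) -> float:
    # Bits per second needed to move this much data in real time.
    return size_bytes * 8 / seconds


if __name__ == "__main__":
    audio = raw_audio_bytes(channels=6, sample_rate_hz=48_000, bytes_per_sample=2, seconds=5)
    video = raw_video_bytes(width=1920, height=1080, bytes_per_pixel=3, fps=60, seconds=5)
    print(f"audio: {audio:,} bytes = {audio / 1e6:.2f} MB")
    print(f"video: {video:,} bytes = {video / 1e9:.2f} GB = {video / 2**30:.2f} GiB")
    print(f"video bitrate: {bitrate_bps(video, 5) / 1e9:.2f} Gbit/s")
```

The GB/GiB split is why the same clip can be quoted as 1.7 or 1.9 "gigabytes": one uses powers of 1024, the other powers of 1000.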

So 5 seconds of raw 1080p 60 fps video takes up around 1.7 GiB (about 1.9 GB) of space. Streaming it in real time would need roughly 3 Gbit/s of bandwidth, which makes distributing media this way impractical. Uncompressed media is simply not feasible to use, so we compress it using a codec.

Codecs

A codec (short for coder-decoder) is a piece of software (or dedicated hardware) that can significantly reduce the size of media without much loss in its quality. The question is: how does it do that?

Encoding is the process of taking raw, uncompressed media and converting it into a compressed form. Using the H.264 codec, our 5-second raw clip could be compressed to around 2 MB. Encoding exploits redundancy in the media (neighboring pixels, and consecutive frames, are often nearly identical) as well as the way our vision works, discarding detail that our eyes cannot tell apart anyway. If you are curious and want to know more about this, go here.
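To get a feel for why consecutive frames compress so well, here is a toy sketch of temporal redundancy. It is not how H.264 works (real codecs use motion compensation, transforms, quantization, and entropy coding, and are lossy); it uses Python’s general-purpose `zlib` as a stand-in compressor on hypothetical synthetic frames, and stores the second frame as a difference from the first, which is almost entirely zeros.

```python
import random
import zlib

WIDTH, HEIGHT = 64, 64


def make_frame(box_x: int, seed: int = 0) -> bytes:
    """Hypothetical 8-bit grayscale frame: noisy background plus a white 8x8 box."""
    rng = random.Random(seed)  # same seed -> identical background in every frame
    pixels = bytearray(rng.randrange(256) for _ in range(WIDTH * HEIGHT))
    for y in range(28, 36):
        for x in range(box_x, box_x + 8):
            pixels[y * WIDTH + x] = 255
    return bytes(pixels)


def delta(prev: bytes, cur: bytes) -> bytes:
    # Per-pixel difference (mod 256); unchanged pixels become 0.
    return bytes((c - p) % 256 for p, c in zip(prev, cur))


def apply_delta(prev: bytes, diff: bytes) -> bytes:
    # The decoder reverses the encoder's step: previous frame + difference.
    return bytes((p + d) % 256 for p, d in zip(prev, diff))


if __name__ == "__main__":
    frame1 = make_frame(box_x=10)
    frame2 = make_frame(box_x=12)  # the box moved 2 pixels to the right
    diff = delta(frame1, frame2)
    print("frame 2 compressed on its own:", len(zlib.compress(frame2)), "bytes")
    print("frame 2 as a delta, compressed:", len(zlib.compress(diff)), "bytes")
    assert apply_delta(frame1, diff) == frame2  # lossless round trip
```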

Decoding is the reverse process, performed when the video is played. So if you compress a video with a certain codec, the media player used to play it must support that codec in order to decompress it.

Containers

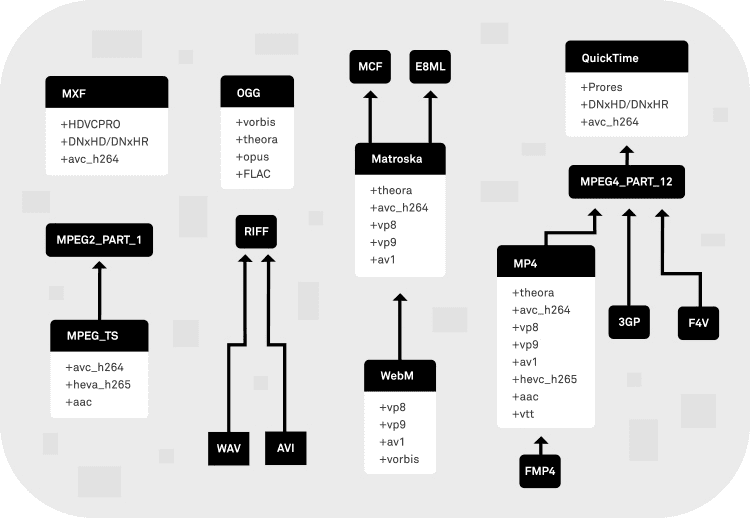

A container is how audio and video coexist in a single file. Once we have compressed the individual audio and video streams, we wrap them in a file called a “container”. It holds the audio and video streams along with general metadata such as the title and resolution, and it can also carry extra tracks like alternate audio tracks and subtitles. MP4, WebM, and MKV are all container formats, and each supports its own set of codecs for the audio and video data it stores. For example, an MP4 file commonly carries H.264 video with AAC audio, while WebM typically carries VP9 or AV1 video with Opus audio.
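MP4 (formally the ISO Base Media File Format) stores everything in “boxes”, each starting with a 4-byte big-endian size and a 4-character type. As a rough sketch of where demuxing begins, the function below lists the top-level boxes of an MP4 byte buffer. Real files have many more box types and nested children (for example, `trak` boxes inside `moov`), and `make_box` is a hypothetical helper used only to build test data.

```python
import struct


def list_top_level_boxes(data: bytes) -> list[tuple[str, int]]:
    """Return (box_type, box_size) for each top-level box in an MP4 byte buffer."""
    boxes = []
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack(">I4s", data[offset:offset + 8])
        header = 8
        if size == 1 and offset + 16 <= len(data):  # 64-bit "largesize" follows the type
            (size,) = struct.unpack(">Q", data[offset + 8:offset + 16])
            header = 16
        elif size == 0:  # box extends to the end of the file
            size = len(data) - offset
        if size < header:  # malformed or truncated box: stop instead of looping forever
            break
        boxes.append((box_type.decode("latin-1"), size))
        offset += size
    return boxes


def make_box(box_type: bytes, payload: bytes) -> bytes:
    # Hypothetical test helper: size (including the 8-byte header) + type + payload.
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


if __name__ == "__main__":
    fake_mp4 = (
        make_box(b"ftyp", b"isom" + b"\x00\x00\x02\x00")  # file type / brand info
        + make_box(b"moov", b"\x00" * 24)                  # metadata: tracks, codecs, timing
        + make_box(b"mdat", b"\x00" * 100)                 # the encoded audio/video samples
    )
    print(list_top_level_boxes(fake_mp4))  # [('ftyp', 16), ('moov', 32), ('mdat', 108)]
```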

Common Media Processing Operations

Here, we will look at operations commonly performed in media processing. This terminology comes up often, so it is worth knowing what each term means and why the operation is needed.

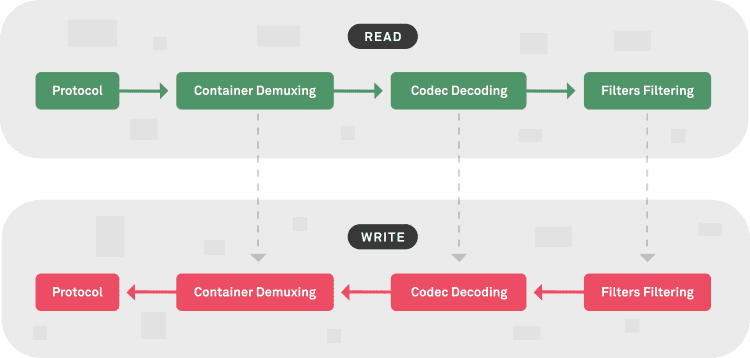

We can refer to FFmpeg’s internal architecture to see which phases are involved in making any change to a media file.

When FFmpeg makes any change to a media file, it:

- starts by reading the file from, or writing it to, a protocol (your file system, HTTP, UDP, etc.)

- then reads or writes the container (MP4, WebM, MKV, etc.), which is called “demuxing” or “muxing” respectively

- next reads or writes the encoded streams using their codecs (H.264, AAC, AV1, etc.), which is called “decoding” or “encoding” respectively

- finally exposes the media streams in their raw formats (e.g. RGB, PCM). Once you have the raw format, all sorts of processing can be done on it.
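As a sketch of that last step, the snippet below asks the `ffmpeg` command-line tool (assumed to be installed and on `PATH`) to run the whole read → demux → decode chain and write raw 24-bit RGB frames to stdout, then slices the byte stream into frames. The `-an`, `-f rawvideo`, and `-pix_fmt rgb24` options are standard ffmpeg options; the Python function names are hypothetical, and the caller is assumed to already know the video’s width and height.

```python
import subprocess
from typing import BinaryIO, Iterator


def decode_to_raw_cmd(src: str) -> list[str]:
    # protocol (file) -> demux -> decode -> raw RGB frames on stdout; audio is dropped (-an).
    return ["ffmpeg", "-i", src, "-an", "-f", "rawvideo", "-pix_fmt", "rgb24", "pipe:1"]


def read_raw_frames(stream: BinaryIO, width: int, height: int) -> Iterator[bytes]:
    """Yield one raw RGB frame (width * height * 3 bytes) at a time."""
    frame_size = width * height * 3
    while True:
        frame = stream.read(frame_size)
        if len(frame) < frame_size:  # end of stream (or a truncated final frame)
            return
        yield frame


def iter_video_frames(src: str, width: int, height: int) -> Iterator[bytes]:
    # Assumes width/height match the decoded video's resolution.
    proc = subprocess.Popen(decode_to_raw_cmd(src), stdout=subprocess.PIPE, stderr=subprocess.DEVNULL)
    assert proc.stdout is not None
    try:
        yield from read_raw_frames(proc.stdout, width, height)
    finally:
        proc.stdout.close()
        proc.wait()
```

Each yielded `bytes` object is a plain RGB image, so any per-pixel processing can run on it before it is encoded and muxed again.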

Let’s look at the terminology used for some common media operations:

Transcoding

- Transcoding is the process of converting media from one codec (A) to another (B).

- Some popular codecs: H.264 (AVC), H.265 (HEVC), and AV1 for video; AAC and Opus for audio.

- Transcoding is needed for several reasons:

- First, for compatibility. Some devices, video players, or editing software might not support certain codecs, so you can transcode the media into a codec that is supported.

- Second, newer codecs can offer better compression at the same quality and better support for higher resolutions and frame rates. Some also add features, such as HDR or 3D audio, that older ones lack.

- Third, some codecs are patent-encumbered and may require licensing fees for certain uses. So, for commercial use, you might transcode your media into a royalty-free, open codec (such as AV1 or Opus) to avoid patent licensing costs.
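For the royalty-free case, a transcode might look like the sketch below, which builds an `ffmpeg` command that decodes whatever codecs the input uses and re-encodes to VP9 video and Opus audio in a WebM container. It assumes an ffmpeg build with the `libvpx-vp9` and `libopus` encoders; `-crf` with `-b:v 0` selects libvpx-vp9’s constant-quality mode, and the value 32 is an arbitrary example, not a recommendation. The function name and file names are hypothetical.

```python
import shlex


def transcode_to_webm_cmd(src: str, dst: str, crf: int = 32) -> list[str]:
    # Decode the input's codecs, then re-encode: video -> VP9, audio -> Opus.
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libvpx-vp9",              # VP9 encoder (needs ffmpeg built with libvpx)
        "-crf", str(crf), "-b:v", "0",     # constant-quality mode for libvpx-vp9
        "-c:a", "libopus",                 # Opus encoder (needs ffmpeg built with libopus)
        dst,
    ]


if __name__ == "__main__":
    # Print the command; pass the list to subprocess.run(cmd, check=True) to execute it.
    print(shlex.join(transcode_to_webm_cmd("input.mp4", "output.webm")))
```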

Transrating

- Transrating is the process of changing the bitrate of media, usually to reduce its file size.

- Bitrate is the amount of data used per unit of time, usually measured in bits per second (e.g. kbps or Mbps).

- For any media, there are three things you have to balance:

- Quality: how “good” the final media looks

- Speed: how much time / processing power is needed to play / process the media

- Space: the media’s file size

- We can usually favor only two of these at a time. For example, if we want high quality and a small file, we need to spend more CPU time encoding it.

- Depending on the context and requirements, you can also strike a partial compromise among all three.
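Because bitrate is data per unit of time, the average bitrate needed for a target file size follows directly: bitrate = size in bits / duration. The sketch below uses that to hit roughly 2 MB for a 5-second clip (about 3.2 Mbit/s), then builds an `ffmpeg` command that re-encodes the video with H.264 at that average bitrate (`-b:v`) while copying the audio untouched. It ignores audio and container overhead, assumes an ffmpeg build with `libx264`, and the function and file names are hypothetical.

```python
import shlex


def bitrate_for_target_size(target_bytes: int, duration_s: float) -> int:
    """Average bitrate (bits/s) that would produce a file of roughly target_bytes."""
    return int(target_bytes * 8 / duration_s)


def transrate_cmd(src: str, dst: str, video_bitrate_bps: int) -> list[str]:
    # Re-encode the video at a lower average bitrate; copy the audio stream as-is.
    kbps = video_bitrate_bps // 1000
    return ["ffmpeg", "-i", src, "-c:v", "libx264", "-b:v", f"{kbps}k", "-c:a", "copy", dst]


if __name__ == "__main__":
    rate = bitrate_for_target_size(2_000_000, 5)  # ~2 MB for a 5-second clip
    print(rate)  # 3200000
    print(shlex.join(transrate_cmd("input.mp4", "smaller.mp4", rate)))
```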

Transsizing

- Transsizing is the process of changing the resolution of media, scaling it up or down.

- Transsizing requires re-encoding the media: the frames are decoded, scaled to the new resolution, and encoded again.
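A minimal sketch of transsizing, assuming an ffmpeg build with `libx264`: the `scale` filter operates on decoded frames, which is why the video has to be re-encoded, and a height of `-2` asks ffmpeg to pick an even height that preserves the aspect ratio. `scaled_size` mirrors that calculation in plain Python; both function names and the file names are hypothetical.

```python
import shlex


def scaled_size(width: int, height: int, new_width: int) -> tuple[int, int]:
    """Scale to new_width, keeping the aspect ratio and rounding height to an even number."""
    new_height = round(height * new_width / width / 2) * 2  # many encoders need even dimensions
    return new_width, new_height


def transsize_cmd(src: str, dst: str, new_width: int) -> list[str]:
    # Decode, scale each frame, re-encode the video; the audio is copied unchanged.
    return ["ffmpeg", "-i", src, "-vf", f"scale={new_width}:-2", "-c:v", "libx264", "-c:a", "copy", dst]


if __name__ == "__main__":
    print(scaled_size(1920, 1080, 1280))  # (1280, 720)
    print(shlex.join(transsize_cmd("input_1080p.mp4", "output_720p.mp4", 1280)))
```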

Transmuxing

- Transmuxing is the process of changing the container format of media.

- For example, converting an MPEG-TS file (a format used in broadcasting and by some camcorders) to MP4 while keeping the audio and video streams intact.

- Along similar lines, muxing is the process of adding one or more encoded streams to a container, and demuxing is extracting an encoded stream from a container.

- “Muxing” and “demuxing” are short for multiplexing and demultiplexing: multiplexing interleaves audio and video into one data stream, and demultiplexing extracts an elementary stream from a media container.
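With the `ffmpeg` CLI, transmuxing and demuxing are both stream copies: `-c copy` passes the encoded packets through without decoding, so only the container changes, and `-map 0:a:0` selects just the first audio stream of the first input. The sketch below only builds the commands; the function and file names are hypothetical, and writing to `.m4a` assumes the audio is AAC.

```python
import shlex


def transmux_cmd(src: str, dst: str) -> list[str]:
    # Copy all selected streams as-is into a new container: no re-encoding.
    return ["ffmpeg", "-i", src, "-c", "copy", dst]


def demux_audio_cmd(src: str, dst: str) -> list[str]:
    # Keep only the first audio stream and copy it out without decoding.
    return ["ffmpeg", "-i", src, "-map", "0:a:0", "-c", "copy", dst]


if __name__ == "__main__":
    print(shlex.join(transmux_cmd("recording.ts", "recording.mp4")))
    print(shlex.join(demux_audio_cmd("recording.mp4", "audio.m4a")))
```

Because nothing is decoded or re-encoded, both operations are fast and lossless, unlike transcoding, transrating, or transsizing.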

That’s it for this post. Knowing these basics helped me understand and take part in conversations about media processing. I hope this has been helpful to you as well. See you next time!